In this lecture:

Remember that we have talked about several unsupervised machine learning tasks, including:

- Clustering

- Compression

- Data visualization

But there is another unsupervised task we haven't talked about yet: generation. Think about what image or text generation is: the model's ability to generate something it has never seen before.

How do we train a model to create a new piece of data that it has never been shown? This can't be supervised learning, right? So what is it?

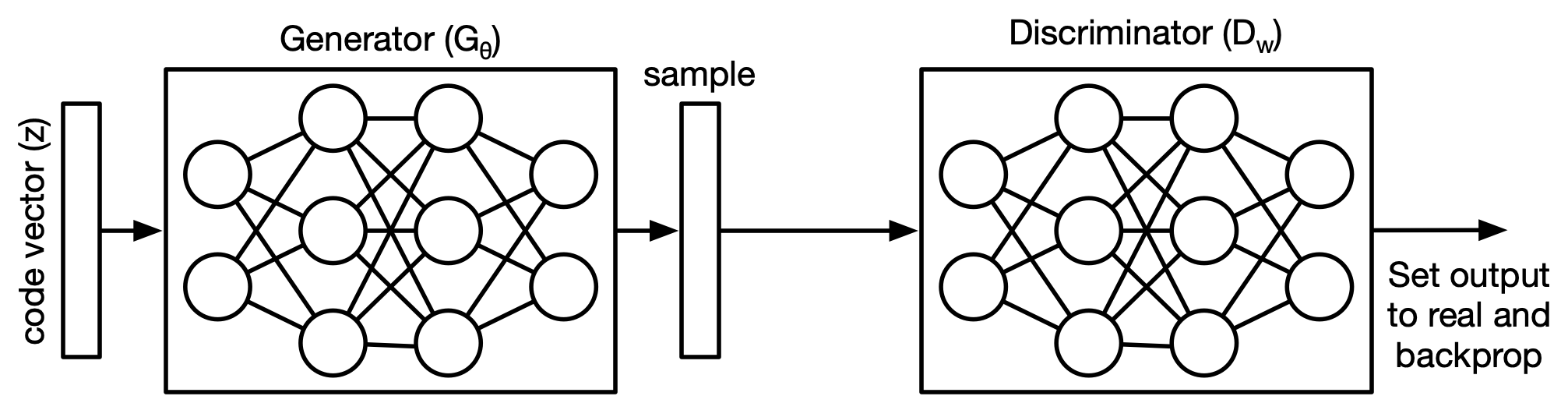

An implicit generative model defines a probability distribution implicitly: we can draw samples from it, but we never write down the distribution's density.

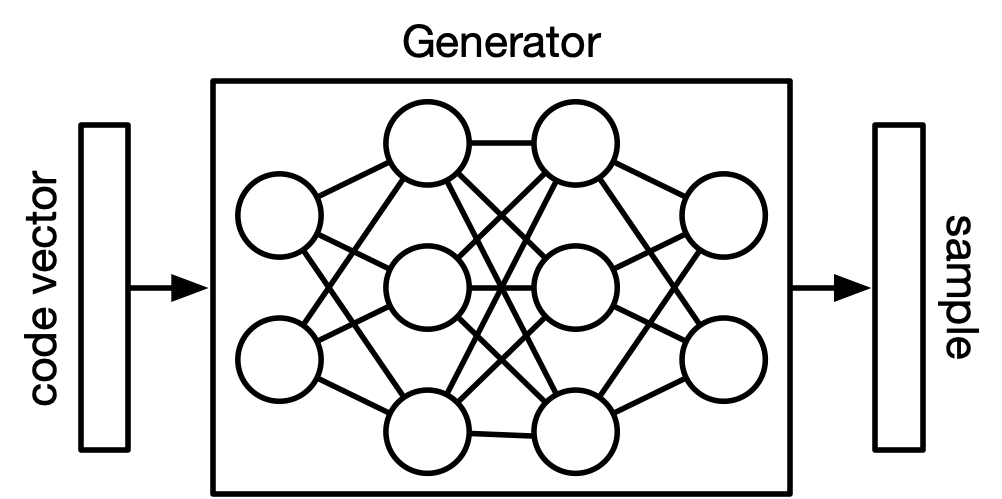

You start by sampling a code vector $z$ from a fixed, simple distribution (for example, a standard Gaussian).

Then the generator network computes a differentiable function $G_\theta$ that maps the code vector $z$ to a point $x = G_\theta(z)$ in data space (for example, an image).
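To make these two steps concrete, here is a minimal sketch in plain Python. It assumes a standard Gaussian as the fixed, simple distribution and uses a hypothetical toy generator `toy_generator` (one affine layer followed by tanh, with made-up weights) in place of a trained network. Each call draws a fresh code vector $z$ and maps it to a 2-D "data point". We can draw as many samples as we like, but nowhere do we write down the density of the resulting distribution, which is what makes the model implicit.

```python
import math
import random

def sample_code(dim, rng):
    """Draw a code vector z from a standard Gaussian (the fixed, simple distribution)."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def toy_generator(z, weights, bias):
    """Hypothetical generator: x = tanh(W z + b), a differentiable map from code space to data space."""
    return [
        math.tanh(sum(w_ij * z_j for w_ij, z_j in zip(row, z)) + b_i)
        for row, b_i in zip(weights, bias)
    ]

rng = random.Random(0)  # fixed seed so the sketch is reproducible
latent_dim, data_dim = 3, 2
# Made-up parameters theta; in a GAN these would be learned.
W = [[0.5, -1.0, 0.3], [1.2, 0.4, -0.7]]
b = [0.1, -0.2]

samples = [toy_generator(sample_code(latent_dim, rng), W, b) for _ in range(5)]
for x in samples:
    print([round(v, 3) for v in x])
```

In a GAN, `W` and `b` would be replaced by a deep network whose parameters $\theta$ are trained so that the samples look like the dataset.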

Remember how we visualized datasets on a 2D plane with PCA.

Quick reference back to Andrej Karpathy's dataset visualization: https://cs.stanford.edu/people/karpathy/cnnembed/cnn_embed_6k.jpg

We want a generative model that can recreate images like those when fed random inputs drawn from a simple distribution:

(Figure from [1].)

But how do we train such a network?

If we simply map some random inputs to some pieces of data in the dataset, all that will do is ask the network to replicate the images dataset exactly. We need to be able to tell the network

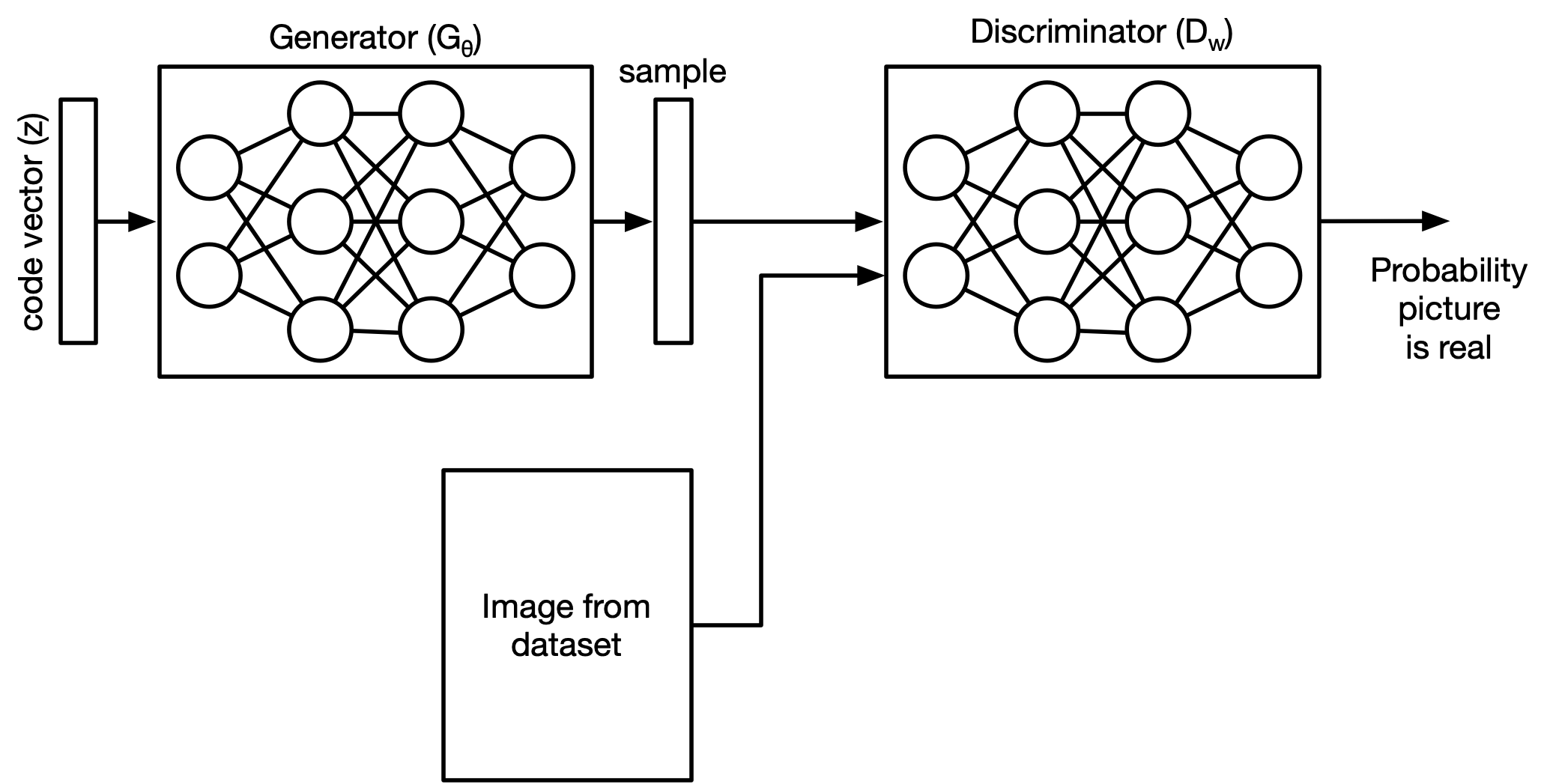

"This image you created looks [or does not look] like it came from the same dataset."

We need something to discriminate between images that could be part of the dataset and images that are not.

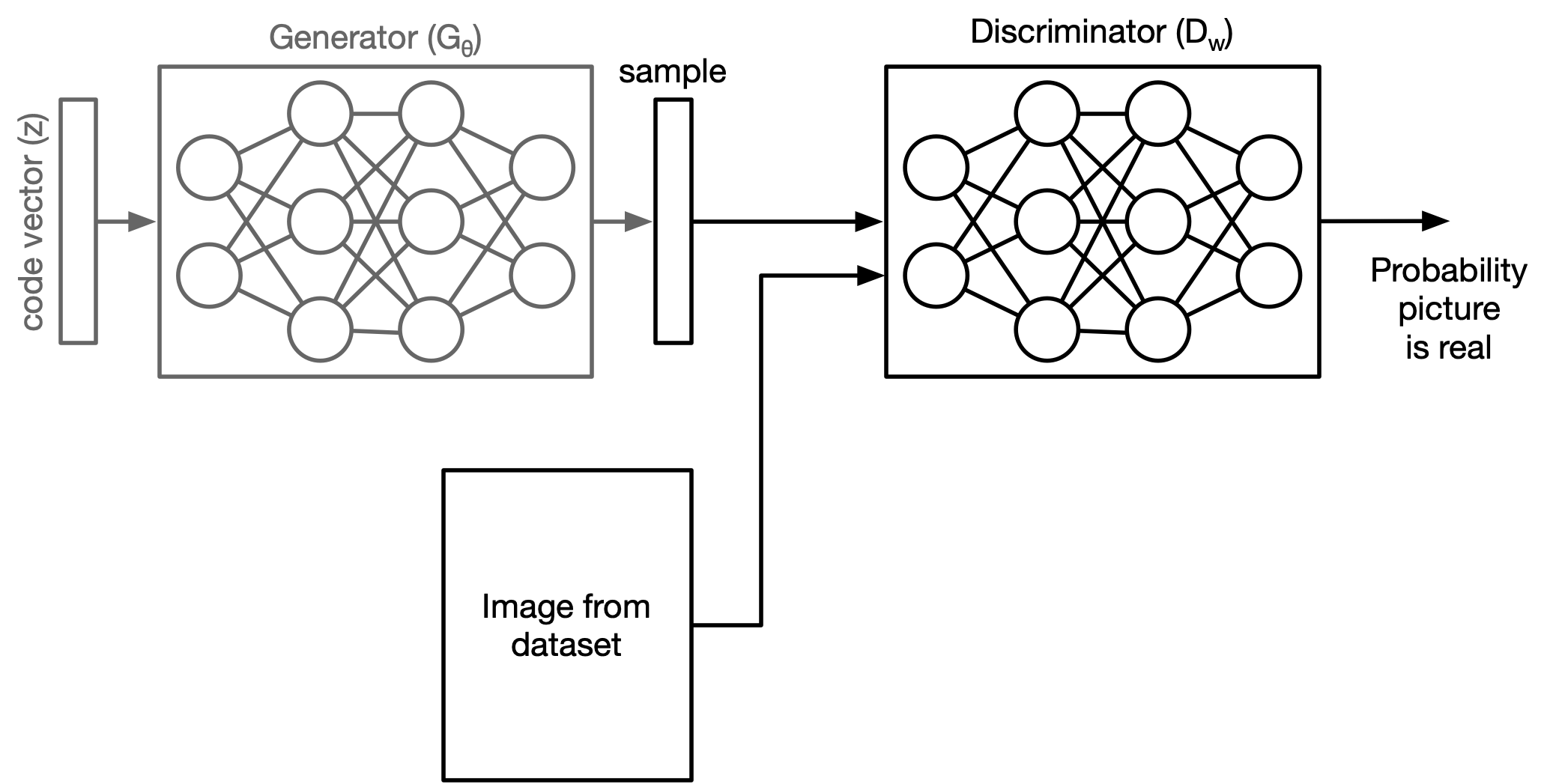

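The idea behind generative adversarial networks (GANs) [3] is to train two different networks at once:

- A generator network $G_\theta$, which maps a code vector $z$ to a piece of data (here, an image).

- A discriminator network $D_w$, which outputs the probability that its input came from the real dataset rather than from the generator.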

Let's look at what we're trying to optimize mathematically:

How to choose the parameters for the discriminator ($w$):

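$$ \mathcal{J}_D = -\sum_x \log D_w(x) - \sum_z \log\left(1 - D_w(G_\theta(z))\right) $$

We want to minimize $\mathcal{J}_D$: a good discriminator outputs $D_w(x) \approx 1$ for real images $x$ and $D_w(G_\theta(z)) \approx 0$ for generated ones.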
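$\mathcal{J}_D$ is just the (summed) binary cross-entropy we already know, with label 1 for real images and label 0 for generated ones. A minimal sketch in plain Python, assuming we already have the discriminator's output probabilities (the numbers below are made up for illustration):

```python
import math

def discriminator_cost(d_real, d_fake):
    """J_D = -sum_x log D(x) - sum_z log(1 - D(G(z))).

    d_real: discriminator outputs D_w(x) on real images
    d_fake: discriminator outputs D_w(G_theta(z)) on generated images
    """
    return (-sum(math.log(p) for p in d_real)
            - sum(math.log(1.0 - p) for p in d_fake))

def bce(probs, labels):
    """Summed binary cross-entropy: -sum [t log p + (1 - t) log(1 - p)]."""
    return -sum(t * math.log(p) + (1 - t) * math.log(1 - p)
                for p, t in zip(probs, labels))

d_real = [0.9, 0.8]  # a fairly confident discriminator on real images
d_fake = [0.1, 0.3]  # ... and on generated images

good = discriminator_cost(d_real, d_fake)
confused = discriminator_cost([0.5, 0.5], [0.5, 0.5])  # D cannot tell them apart
same_as_bce = bce(d_real + d_fake, [1, 1, 0, 0])       # real = 1, fake = 0

print(round(good, 4), round(confused, 4), round(same_as_bce, 4))
```

The confident discriminator gets a lower cost than the confused one, and its cost matches the BCE computed with labels 1 (real) and 0 (fake).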

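On the other hand, the generator's cost function is the reverse of the discriminator's:

$$ \begin{aligned} \mathcal{J}_G &= -\mathcal{J}_D \\ &= \text{const} + \sum_z \log\left(1 - D_w(G_\theta(z))\right) \end{aligned} $$

Here the constant is $\sum_x \log D_w(x)$, which does not depend on the generator's parameters $\theta$. We want to minimize $\mathcal{J}_G$ (which is the same as maximizing $\mathcal{J}_D$) because we want the generator to be really good at fooling the discriminator.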

This is called the minimax formulation. The generator and discriminator are playing a zero-sum game against one another, so we get an objective of the form:

$$ \max_\theta \min_w \mathcal{J}_D $$

Written out in full, we want:

$$ \max_\theta \min_w \left[ -\sum_x \log D_w(x) - \sum_z \log\left(1 - D_w(G_\theta(z))\right) \right] $$

Looking at just the generator's optimization, the first term does not depend on $\theta$, so we are left with:

$$ \max_\theta \left[ -\sum_z \log\left(1 - D_w(G_\theta(z))\right) \right] $$

If the generator is really good, $D_w(G_\theta(z))$ approaches 1, so $-\log\left(1 - D_w(G_\theta(z))\right)$ becomes very large, which is what we want. But what if the discriminator is good and the generator is really bad? Then $D_w(G_\theta(z)) \approx 0$ and the objective is near 0, far from its maximum. That alone would be fine, but it also means the gradient is very small, so the generator barely learns exactly when it most needs to.
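To see why the gradient vanishes, assume the discriminator ends in a sigmoid (as the discriminator in the MNIST code below does), so $D_w(G_\theta(z)) = \sigma(s)$ for some logit $s$. Using $\sigma'(s) = \sigma(s)\left(1 - \sigma(s)\right)$, the derivative of one term of the generator's objective with respect to $s$ is:

$$ \frac{\partial}{\partial s}\left[-\log\left(1 - \sigma(s)\right)\right] = \frac{\sigma'(s)}{1 - \sigma(s)} = \frac{\sigma(s)\left(1 - \sigma(s)\right)}{1 - \sigma(s)} = \sigma(s) $$

When the generator is bad, the discriminator confidently rejects its samples, so $\sigma(s) \approx 0$ and the gradient signal reaching $\theta$ through $s$ is also close to 0.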

Remember how, in logistic regression with a squared-error loss, a model that was confidently wrong got only a tiny gradient, and we switched to binary cross-entropy to fix this? This is called the saturation problem.

The same thing happens here, so let's reformulate the generator's loss function:

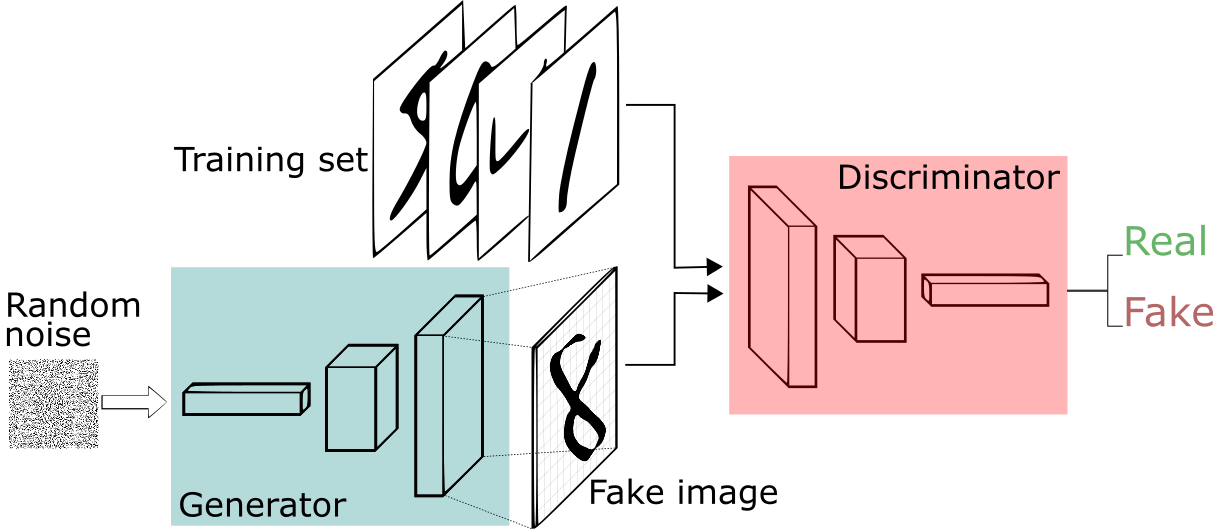

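$$ \min_\theta -\sum_z \log D_w(G_\theta(z)) $$

Now a bad generator whose samples are confidently rejected ($D_w(G_\theta(z)) \approx 0$) gets a large loss and a strong gradient instead of a vanishing one.

Let's say we want to generate new MNIST handwritten-digit images. How do we do it?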
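Before building the MNIST model, here is a quick numerical check of why the reformulated loss helps. This plain-Python sketch assumes the discriminator ends in a sigmoid, so $D_w(G_\theta(z)) = \sigma(s)$ for some logit $s$. It compares the gradient with respect to $s$ of the original generator term $-\log(1 - \sigma(s))$, which is $\sigma(s)$, with that of the reformulated term $-\log \sigma(s)$, which is $-(1 - \sigma(s))$. The helper names are hypothetical, and a finite-difference check guards the hand-derived formulas.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def loss_saturating(s):
    """Original generator term: -log(1 - D), with D = sigmoid(s)."""
    return -math.log(1.0 - sigmoid(s))

def loss_non_saturating(s):
    """Reformulated generator term: -log(D), with D = sigmoid(s)."""
    return -math.log(sigmoid(s))

def grad_saturating(s):
    """d/ds of -log(1 - sigmoid(s))."""
    return sigmoid(s)

def grad_non_saturating(s):
    """d/ds of -log(sigmoid(s))."""
    return -(1.0 - sigmoid(s))

def numeric_grad(f, s, h=1e-6):
    """Central finite difference, used to double-check the formulas above."""
    return (f(s + h) - f(s - h)) / (2 * h)

# A very negative logit means the discriminator is confident the sample is fake.
for s in [-6.0, -2.0, 0.0, 2.0]:
    assert abs(numeric_grad(loss_saturating, s) - grad_saturating(s)) < 1e-6
    assert abs(numeric_grad(loss_non_saturating, s) - grad_non_saturating(s)) < 1e-6
    print(f"D(G(z)) = {sigmoid(s):.4f}  "
          f"saturating grad = {grad_saturating(s):+.4f}  "
          f"non-saturating grad = {grad_non_saturating(s):+.4f}")
```

For a very negative logit, the saturating gradient is close to 0 while the non-saturating gradient has magnitude close to 1.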


```python
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, transforms
import matplotlib.pyplot as plt

# Set the computation device: GPU if available, else CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


# Generator model definition: maps a latent code z to a 28x28 image.
class Generator(nn.Module):
    def __init__(self, latent_dim):
        super(Generator, self).__init__()
        # Note: the second positional argument of nn.BatchNorm1d is eps,
        # so nn.BatchNorm1d(256, 0.8) sets eps=0.8.
        self.model = nn.Sequential(
            nn.Linear(latent_dim, 128),
            nn.ReLU(inplace=True),
            nn.Linear(128, 256),
            nn.BatchNorm1d(256, 0.8),
            nn.ReLU(inplace=True),
            nn.Linear(256, 512),
            nn.BatchNorm1d(512, 0.8),
            nn.ReLU(inplace=True),
            nn.Linear(512, 28 * 28),
            nn.Tanh()  # pixel values in [-1, 1], the same range as the normalized MNIST images
        )

    def forward(self, z):
        img = self.model(z)
        # Reshape the flat 784-vector to (batch, 1, 28, 28).
        img = img.view(img.size(0), 1, 28, 28)
        return img


# Discriminator model definition: maps a 28x28 image to the probability that it is real.
class Discriminator(nn.Module):
    def __init__(self):
        super(Discriminator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(28 * 28, 512),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(512, 256),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(256, 1),
            nn.Sigmoid()
        )

    def forward(self, img):
        # Flatten the image from (batch, 1, 28, 28) to (batch, 784)
        img_flat = img.view(img.size(0), -1)
        validity = self.model(img_flat)
        return validity


# Hyperparameters
latent_dim = 100
batch_size = 64
epochs = 300

# Initialize generator and discriminator and move to device.
generator = Generator(latent_dim).to(device)
discriminator = Discriminator().to(device)

# Optimizers (one per network) and loss criterion
optimizer_G = optim.Adam(generator.parameters(), lr=0.0002, betas=(0.5, 0.999))
optimizer_D = optim.Adam(discriminator.parameters(), lr=0.0002, betas=(0.5, 0.999))
adversarial_loss = nn.BCELoss()  # binary cross-entropy on the discriminator's probabilities

# DataLoader for the MNIST dataset.
dataloader = torch.utils.data.DataLoader(
    datasets.MNIST(
        "./data", train=True, download=True,
        transform=transforms.Compose([
            transforms.ToTensor(),                # pixels in [0, 1]
            transforms.Normalize((0.5,), (0.5,))  # maps pixels to [-1, 1]
        ])
    ),
    batch_size=batch_size, shuffle=True
)

# For recording average loss per epoch.
epoch_d_losses = []
epoch_g_losses = []

# This list will store the 5-image samples from every 20th epoch.
sample_images_rows = []
```
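One detail in the setup code is worth spelling out. torchvision's `Normalize` computes `(x - mean) / std` per channel, and `ToTensor` scales pixels to $[0, 1]$, so with mean and std both 0.5 the real images end up in $[-1, 1]$. That is the same range as the generator's final `nn.Tanh()`; otherwise the discriminator could tell real from fake just from the value range. A plain-Python sketch of that arithmetic (the helper names are hypothetical):

```python
def normalize_pixel(x, mean=0.5, std=0.5):
    """Same arithmetic as transforms.Normalize((0.5,), (0.5,)) applied to one pixel value."""
    return (x - mean) / std

def denormalize_pixel(y, mean=0.5, std=0.5):
    """Inverse map, e.g. for turning generator outputs back into [0, 1] pixels."""
    return y * std + mean

# ToTensor() gives pixels in [0, 1]; after normalization they match tanh's range [-1, 1].
for x in [0.0, 0.25, 0.5, 1.0]:
    print(x, "->", normalize_pixel(x))
```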

We need to train the two models separately, each with its own optimizer, alternating between them on every batch. So how do we do that?

```python
# Training loop.
for epoch in range(epochs):
    epoch_d_loss = 0.0
    epoch_g_loss = 0.0

    for i, (imgs, _) in enumerate(dataloader):
        # Transfer images to device.
        imgs = imgs.to(device)

        # Target labels: 1 = real, 0 = generated.
        valid = torch.ones((imgs.size(0), 1), device=device)
        fake = torch.zeros((imgs.size(0), 1), device=device)

        # Train Generator.
        optimizer_G.zero_grad()
        z = torch.randn(imgs.size(0), latent_dim, device=device)
        gen_imgs = generator(z)
        # Using the "valid" labels for generated images makes this loss the batch mean
        # of -log D(G(z)), i.e. the reformulated (non-saturating) generator loss.
        g_loss = adversarial_loss(discriminator(gen_imgs), valid)
        g_loss.backward()
        optimizer_G.step()

        # Train Discriminator.
        # zero_grad() also clears the gradients that g_loss.backward() left in D's parameters.
        optimizer_D.zero_grad()
        real_loss = adversarial_loss(discriminator(imgs), valid)
        # detach() is important: it stops this loss from backpropagating into the generator.
        fake_loss = adversarial_loss(discriminator(gen_imgs.detach()), fake)
        d_loss = (real_loss + fake_loss) / 2
        d_loss.backward()
        optimizer_D.step()

        epoch_g_loss += g_loss.item()
        epoch_d_loss += d_loss.item()

        if i % 100 == 0:
            print(f"[Epoch {epoch+1}/{epochs}] [Batch {i}/{len(dataloader)}] "
                  f"[D loss: {d_loss.item():.4f}] [G loss: {g_loss.item():.4f}]")

    # Average losses for the epoch.
    avg_d_loss = epoch_d_loss / len(dataloader)
    avg_g_loss = epoch_g_loss / len(dataloader)
    epoch_d_losses.append(avg_d_loss)
    epoch_g_losses.append(avg_g_loss)

    # Every 20th epoch, generate and store a sample of 5 images.
    if (epoch + 1) % 20 == 0:
        with torch.no_grad():
            sample_z = torch.randn(5, latent_dim, device=device)
            sample_imgs = generator(sample_z).detach().cpu()
        sample_images_rows.append(sample_imgs)
        print("Saved sample images for epoch", epoch+1)

# Plot the average generator and discriminator losses over epochs.
plt.figure(figsize=(10, 5))
plt.plot(range(1, epochs+1), epoch_d_losses, label="Discriminator Loss", marker='o')
plt.plot(range(1, epochs+1), epoch_g_losses, label="Generator Loss", marker='o')
plt.title("Average Generator and Discriminator Loss per Epoch")
plt.xlabel("Epoch")
plt.ylabel("Loss")
plt.legend()
plt.grid(True)
plt.show()

# Combine all sample rows (from every 20th epoch) into one grid.
num_samples = len(sample_images_rows)  # Should be 15 for 300 epochs.
# squeeze=False keeps axes 2-D even if there is only one row of samples.
fig, axes = plt.subplots(num_samples, 5, figsize=(15, num_samples * 3), squeeze=False)
for row_idx, sample_imgs in enumerate(sample_images_rows):
    for col_idx in range(5):
        axes[row_idx, col_idx].imshow(sample_imgs[col_idx].view(28, 28), cmap='gray')
        axes[row_idx, col_idx].axis('off')
plt.suptitle("Sample Images Every 20th Epoch", fontsize=16)
plt.tight_layout()
plt.show()
```
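Stripped of the networks, each iteration of the training loop is one generator step followed by one discriminator step. The sketch below mimics that order in plain Python on a deliberately tiny, hypothetical problem. The real data is the single value 3.0, the "generator" just outputs its parameter `b`, the "discriminator" is $D(x) = \sigma(wx + c)$, and gradients are written out by hand. As in the PyTorch loop, the fake sample is computed once, the generator step uses the non-saturating loss $-\log D(G(z))$, and the discriminator step treats the fake sample as a constant (the role `detach()` plays in the loop).

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

REAL_X = 3.0  # hypothetical "dataset": a single real value

def alternating_step(b, w, c, lr=0.1):
    """One generator update followed by one discriminator update (toy, hand-derived gradients)."""
    fake_x = b  # toy generator G(z) = b ignores z

    # Generator step: minimize -log D(G(z)) = -log sigmoid(w * b + c) with respect to b.
    d_fake = sigmoid(w * fake_x + c)
    grad_b = -(1.0 - d_fake) * w
    new_b = b - lr * grad_b

    # Discriminator step: minimize -log D(x_real) - log(1 - D(fake_x)) w.r.t. w and c.
    # fake_x was computed before the generator update and is treated as a constant,
    # mirroring gen_imgs.detach(). D has not been updated yet, so d_fake is unchanged.
    d_real = sigmoid(w * REAL_X + c)
    grad_w = -(1.0 - d_real) * REAL_X + d_fake * fake_x
    grad_c = -(1.0 - d_real) + d_fake
    new_w = w - lr * grad_w
    new_c = c - lr * grad_c
    return new_b, new_w, new_c

b, w, c = 0.0, 0.1, 0.0
for step in range(3):
    b, w, c = alternating_step(b, w, c)
    print(f"step {step + 1}: b = {b:.4f}, w = {w:.4f}, c = {c:.4f}")
```

The PyTorch loop does the same thing, with autograd computing the gradients and Adam in place of plain gradient steps.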

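Several parts of the MNIST code are needed for GPU training:

```python
# 1. Choose the device once: GPU if available, else CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# 2. Move both models to the device (for an nn.Module, .to() moves its parameters in place).
generator = Generator(latent_dim).to(device)
discriminator = Discriminator().to(device)

# 3. Inside the training loop, move each batch of real images to the device
#    (for a tensor, .to() returns a new tensor, so reassign it).
imgs = imgs.to(device)

# 4. Create new tensors (labels and noise) directly on the device.
valid = torch.ones((imgs.size(0), 1), device=device)
fake = torch.zeros((imgs.size(0), 1), device=device)
z = torch.randn(imgs.size(0), latent_dim, device=device)
```

The same pattern applies to any PyTorch model:

```python
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model.to(device)

for batch_idx, (inputs, targets) in enumerate(dataloader):
    inputs = inputs.to(device)    # Move batch to device
    targets = targets.to(device)  # Move batch to device
    # Forward pass, loss calculation, etc.
```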

[1] OpenAI. "Generative models." https://openai.com/index/generative-models/

[2] Linder-Norén, Erik. "PyTorch-GAN." https://github.com/sw-song/PyTorch-GAN/tree/master

[3] Goodfellow, Ian J., et al. "Generative adversarial nets." Advances in Neural Information Processing Systems 27 (2014).

[4] Karras, Tero, et al. "Progressive growing of GANs for improved quality, stability, and variation." 2017.

[5] Zhu, Jun-Yan, et al. "Unpaired image-to-image translation using cycle-consistent adversarial networks." Proceedings of the IEEE International Conference on Computer Vision. 2017.

[6] Grosse, Roger. "Lecture 19 slides." https://www.cs.toronto.edu/~rgrosse/courses/csc321_2018/slides/lec19.pdf

[7] Zhang, Han, et al. "StackGAN: Text to photo-realistic image synthesis with stacked generative adversarial networks." Proceedings of the IEEE International Conference on Computer Vision. 2017.

[8] SW-Song. "PyTorch🔥 GAN Basic Tutorial for beginner." https://www.kaggle.com/code/songseungwon/pytorch-gan-basic-tutorial-for-beginner/notebook